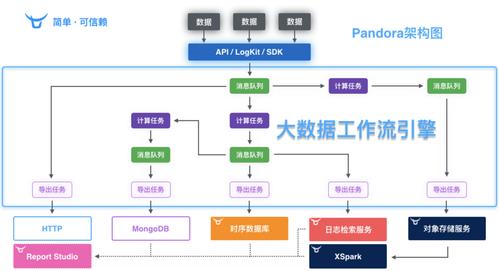

The Core of Big Data Application Architecture Design

Title: Exploring the Architecture of Big Data Applications

Introduction:

In recent years, big data has emerged as a crucial asset for organizations looking to gain valuable insights and make data-driven decisions. However, managing and processing large, complex data sets efficiently requires a robust architecture. In this article, we explore the key components and considerations in building a big data application architecture.

1. Data Storage:

The first step in designing a big data architecture is deciding how and where to store the data. Traditional relational databases often struggle with the volume, variety, and velocity of big data. As a result, many organizations adopt NoSQL databases and distributed file systems such as the Hadoop Distributed File System (HDFS) to store and manage large-scale data sets efficiently.
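To make the idea of a distributed file system concrete, the sketch below models how a large file is split into fixed-size blocks and how each block is copied to several nodes. It assumes the HDFS defaults of a 128 MB block size and a replication factor of 3; the function name `plan_blocks` and the simple round-robin placement are hypothetical simplifications (real HDFS uses a rack-aware placement policy).

```python
import math

# Assumed defaults, matching HDFS out of the box: 128 MB blocks, 3 replicas.
BLOCK_SIZE = 128 * 1024 * 1024
REPLICATION = 3


def plan_blocks(file_size: int, nodes: list[str],
                block_size: int = BLOCK_SIZE,
                replication: int = REPLICATION) -> list[list[str]]:
    """Return, for each block of a file, the nodes that hold a replica.

    Hypothetical round-robin placement for illustration only; HDFS itself
    places replicas with a rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    num_blocks = math.ceil(file_size / block_size)
    placement = []
    for b in range(num_blocks):
        # Shift the starting node per block so storage load spreads evenly,
        # and every replica of a block lands on a distinct node.
        placement.append([nodes[(b + r) % len(nodes)] for r in range(replication)])
    return placement


# A 300 MB file on a four-node cluster needs three blocks.
plan = plan_blocks(300 * 1024 * 1024, ["n0", "n1", "n2", "n3"])
```

Because every block has replicas on several nodes, losing one machine does not lose data, and computation can be scheduled on whichever node already holds a copy.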

2. Data Ingestion:

Data ingestion is the process of collecting data and importing it into the big data platform. This step involves extracting data from sources such as databases, files, data streams, or APIs. Apache Kafka and Apache Flume support real-time streaming ingestion, while Apache Sqoop handles bulk batch transfers between relational databases and Hadoop, and Apache NiFi automates data flows between systems in either batch or streaming mode.
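Streaming platforms such as Kafka organize incoming events into an append-only log split into partitions, with consumers reading forward from an offset they track themselves. The toy model below sketches that idea. The class `PartitionedLog` is hypothetical, and it hashes keys with CRC32 as a stand-in (Kafka's default partitioner uses murmur2), so it illustrates the concept rather than Kafka's API.

```python
import zlib
from collections import defaultdict


class PartitionedLog:
    """Toy append-only log divided into partitions (a Kafka-like model).

    Hypothetical illustration: CRC32 stands in for Kafka's murmur2 hashing.
    """

    def __init__(self, num_partitions: int):
        self.num_partitions = num_partitions
        # partition id -> ordered list of (key, value) events
        self.partitions = defaultdict(list)

    def partition_for(self, key: str) -> int:
        # The same key always maps to the same partition,
        # which preserves ordering for events sharing a key.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def append(self, key: str, value: str) -> tuple[int, int]:
        """Store an event and return its (partition, offset)."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def read(self, partition: int, from_offset: int) -> list[tuple[str, str]]:
        # Consumers remember their own offset and read forward from it.
        return self.partitions[partition][from_offset:]


log = PartitionedLog(num_partitions=4)
log.append("user-42", "click")
log.append("user-42", "view")
```

Keying by user keeps each user's events in order while still letting different partitions be written and consumed in parallel.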

3. Data Processing:

Once the data is ingested, it needs to be processed to extract meaningful insights. Big data processing frameworks such as Apache Spark and Apache Hadoop provide distributed computing capabilities that process massive data sets in parallel across a cluster. Hadoop MapReduce is geared toward batch processing, while Spark supports batch processing, real-time (stream) processing, and machine learning algorithms.
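The classic way to see how these frameworks parallelize work is the MapReduce word count. The single-machine sketch below mirrors the three phases (map, shuffle, reduce); the thread pool is only a stand-in for the worker machines a real cluster would use, and all function names are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split: str) -> list[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in this input split.
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    # Group all emitted values by key, as the framework does between phases.
    groups = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Combine every value collected for one key.
    return key, sum(values)


def word_count(splits: list[str]) -> dict[str, int]:
    # Threads stand in for the cluster nodes that run map tasks in parallel.
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_phase, splits))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["big data big", "Data pipelines"])
# counts == {"big": 2, "data": 2, "pipelines": 1}
```

Each map task touches only its own split, so adding machines lets more splits be processed at once; only the shuffle moves data between nodes.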

4. Data Transformation:

Data transformation involves converting raw data into a structured format suitable for analysis. This step includes data cleaning, normalization, aggregation, and enrichment. Tools like Apache Pig (with its Pig Latin scripting language) and Apache Hive (with its SQL-like HiveQL) let users express complex data transformations in high-level scripts or queries.
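The four transformation steps can be sketched on a handful of records. The sample data, the `COUNTRY_NAMES` lookup table, and the function names below are hypothetical; in Hive, the final step would typically be a `GROUP BY` query, but here each step is a plain Python function so the stages are easy to see.

```python
from collections import defaultdict

# Hypothetical raw input and reference table, for illustration only.
RAW = [
    {"user": " alice ", "country": "us", "amount": "12.50"},
    {"user": "BOB", "country": "DE", "amount": "7"},
    {"user": "alice", "country": "US", "amount": None},  # missing value
    {"user": "carol", "country": "de", "amount": "3.25"},
]
COUNTRY_NAMES = {"US": "United States", "DE": "Germany"}


def clean(records):
    # Cleaning: drop records whose amount is missing.
    return [r for r in records if r["amount"] is not None]


def normalize(records):
    # Normalization: trimmed, consistently cased strings and numeric amounts.
    return [
        {"user": r["user"].strip().lower(),
         "country": r["country"].upper(),
         "amount": float(r["amount"])}
        for r in records
    ]


def enrich(records):
    # Enrichment: join each record against the reference table.
    return [{**r, "country_name": COUNTRY_NAMES.get(r["country"], "Unknown")}
            for r in records]


def aggregate(records):
    # Aggregation: total amount per country.
    totals = defaultdict(float)
    for r in records:
        totals[r["country_name"]] += r["amount"]
    return dict(totals)


result = aggregate(enrich(normalize(clean(RAW))))
# result == {"United States": 12.5, "Germany": 10.25}
```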

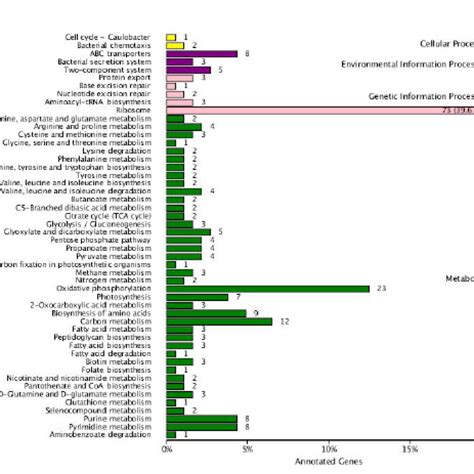

5. Data Analysis:

Data analysis is the heart of any big data application architecture. It involves applying statistical algorithms, machine learning models, and data visualization techniques to gain insights from the data. Frameworks like Apache Spark MLlib and Python libraries like scikit-learn and TensorFlow provide powerful tools for data analysis and predictive modeling.
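As a small example of predictive modeling, the sketch below fits a straight line by ordinary least squares, the same objective that scikit-learn's `LinearRegression` minimizes. It is written with the standard library only, so it shows the underlying formula rather than any library's API; `fit_line` and `predict` are hypothetical names.

```python
from statistics import mean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(xs), mean(ys)
    # slope = sum((x - x_bar)(y - y_bar)) / sum((x - x_bar)^2)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # The fitted line always passes through the point (x_bar, y_bar).
    return slope, y_bar - slope * x_bar


def predict(model: tuple[float, float], x: float) -> float:
    slope, intercept = model
    return slope * x + intercept


model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])  # (2.0, 1.0)
```

At big data scale the same model would be trained by a distributed library such as Spark MLlib, which computes these sums across partitions instead of in one process.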

6. Data Storage and Retrieval:

The processed and analyzed data needs to be stored efficiently for future use. Data warehouses and data lakes serve as centralized repositories: warehouses typically hold structured, modeled data, while data lakes store raw data in its native format, including semi-structured and unstructured data. These systems let data analysts and business users retrieve and query data easily.
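A common technique for fast retrieval from a data lake is to lay tables out in partition directories (for example `events/dt=2024-01-01/`, the Hive-style `key=value` convention) so that queries filtering on the partition key scan only the matching directory. The in-memory sketch below models that partition pruning; `PartitionedStore` is a hypothetical class, and a dictionary stands in for the file system.

```python
from collections import defaultdict


class PartitionedStore:
    """In-memory stand-in for a data lake table stored in Hive-style
    partition directories such as 'events/dt=2024-01-01/'.
    Hypothetical sketch, not a real storage API."""

    def __init__(self, table: str, partition_key: str):
        self.table = table
        self.partition_key = partition_key
        self.files = defaultdict(list)  # partition path -> records

    def _path(self, value: str) -> str:
        return f"{self.table}/{self.partition_key}={value}/"

    def write(self, record: dict) -> None:
        # Each record lands in the directory for its partition value.
        self.files[self._path(record[self.partition_key])].append(record)

    def query(self, partition_value: str, predicate=lambda r: True) -> list[dict]:
        # Partition pruning: read only the matching directory,
        # never the rest of the table.
        return [r for r in self.files.get(self._path(partition_value), [])
                if predicate(r)]


store = PartitionedStore("events", "dt")
store.write({"dt": "2024-01-02", "user": "b", "n": 5})
recent = store.query("2024-01-02", lambda r: r["n"] > 3)
```

Choosing a partition key that matches the most common filter (often a date) is what keeps such queries cheap as the table grows.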

7. Data Security and Governance:

Ensuring data security and governance is crucial to protect sensitive information and comply with regulatory requirements. Big data platforms should enforce access controls, encryption, and auditing mechanisms. Additionally, organizations should establish data governance policies to maintain data integrity, quality, and privacy throughout the data lifecycle.
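Access control, auditing, and protection of sensitive fields can be combined in a single read path. The sketch below assumes a hypothetical role-to-columns policy (`POLICY`), a hypothetical set of sensitive columns that are always masked, and an in-memory audit log; a real platform would enforce this in a dedicated policy engine and also encrypt data at rest and in transit, which the sketch does not attempt.

```python
from datetime import datetime, timezone

# Hypothetical policy: which columns each role may read.
POLICY = {
    "analyst": {"user_id", "country", "amount"},
    "support": {"user_id", "email"},
}
SENSITIVE = {"email"}  # columns that are masked even when access is allowed

audit_log: list[dict] = []


def mask(value: str) -> str:
    # Keep the first character and the domain of an e-mail address.
    local, _, domain = value.partition("@")
    return local[:1] + "***@" + domain


def read_columns(role: str, user: str, row: dict, columns: list[str]) -> dict:
    allowed = POLICY.get(role, set())
    denied = [c for c in columns if c not in allowed]
    # Audit every access attempt, whether it is granted or not.
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "columns": columns,
        "granted": not denied,
    })
    if denied:
        raise PermissionError(f"role {role!r} may not read {denied}")
    return {c: mask(row[c]) if c in SENSITIVE else row[c] for c in columns}


row = {"user_id": 7, "email": "alice@example.com", "country": "US", "amount": 9.5}
visible = read_columns("support", "sam", row, ["user_id", "email"])
# visible == {"user_id": 7, "email": "a***@example.com"}
```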

8. Scalability and Performance:

Big data applications must be able to scale horizontally to handle growing data volumes and user demands. Distributed computing architectures, cluster managers such as Apache Mesos or Kubernetes, and auto-scaling mechanisms help provide high availability, fault tolerance, and consistent performance across the system.
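Auto-scaling usually reduces to a simple rule. Kubernetes' Horizontal Pod Autoscaler documents its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping the change when the ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that rule; the `min_replicas`/`max_replicas` defaults are illustrative assumptions, and the real controller applies further logic (stabilization windows, readiness handling) that is omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling rule (sketch, not the real controller)."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the system does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (bounds here are illustrative).
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% CPU with a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))
```

Scaling proportionally to the metric ratio converges quickly, while the tolerance band and replica bounds keep it from overreacting to noise or exhausting the cluster.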

9. Monitoring and Management:

Monitoring the performance and health of the big data infrastructure is essential for troubleshooting and optimizing resource utilization. Tools like Apache Ambari and Grafana provide monitoring and alerting capabilities, while Apache ZooKeeper provides coordination services (configuration, naming, and synchronization) that help keep distributed systems reliable.
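Alerting rules in monitoring tools typically evaluate a metric over a window rather than reacting to a single spike. The sketch below implements one such rule: fire when the average of the last `window` samples exceeds a threshold, and resolve when it drops back. `ThresholdAlert` is a hypothetical class that illustrates the idea; it does not reproduce how Grafana or Ambari configure alerts.

```python
from collections import deque
from typing import Optional


class ThresholdAlert:
    """Fire when the moving average of recent samples exceeds a threshold.
    Hypothetical rule for illustration only."""

    def __init__(self, threshold: float, window: int):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # keeps only the latest samples
        self.firing = False

    def observe(self, value: float) -> Optional[str]:
        self.samples.append(value)
        if len(self.samples) < self.samples.maxlen:
            return None  # not enough data to evaluate yet
        avg = sum(self.samples) / len(self.samples)
        # Report only state changes, so one incident yields one alert.
        if avg > self.threshold and not self.firing:
            self.firing = True
            return f"ALERT: average {avg:.1f} > {self.threshold}"
        if avg <= self.threshold and self.firing:
            self.firing = False
            return f"RESOLVED: average {avg:.1f} <= {self.threshold}"
        return None


cpu = ThresholdAlert(threshold=80, window=3)
events = [cpu.observe(v) for v in [70, 85, 90, 95, 50]]
```

Averaging over a window trades a little detection latency for far fewer false alarms from momentary spikes.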

Conclusion:

Building a robust big data application architecture involves careful consideration of storage, ingestion, processing, analysis, and security components. The chosen technologies and frameworks should align with the specific requirements and objectives of the organization. By designing a scalable, secure, and efficient architecture, businesses can harness the power of big data to gain valuable insights and stay competitive in today's data-driven world.

Note: This article provides a high-level overview of big data application architecture. Each component mentioned can be explored further and implemented based on specific business needs and available technologies.